ChatGPT and other AI chatbots are arguably the most useful time-saving tools since the invention of the computer – but there are certain details that you should never share with them, unless you’re happy for your private data to be potentially shared with the world.

Chatbots train on your data, so anything that you put into them could well be used to influence the next request they receive from a user. While most queries are unlikely to cause issues, sharing certain information could leave you exposed to fraud, or even jeopardize your job.

We explain some of the biggest things you should never share with ChatGPT and similar platforms, and how you can stop ChatGPT from training on your data.

Key Takeaways

- AI chatbots, including ChatGPT, train on the data you provide, and use this to inform future responses for other users

- When using an AI chatbot, it’s unwise to share personal, financial or company data

- Some companies, including Apple and Samsung, have actually banned the use of ChatGPT in some instances to protect company data

- So-called ‘private chats’ with AI chatbots may also be shared with other users or used in training

- Opting out of providing training data for AI chatbots isn’t always easy, or possible

7 things you should never share with ChatGPT:

1. Sensitive Company Data

If you haven’t opted out of ChatGPT storing your data, then anything you put into the platform is considered fair game, and could be used to train the underlying large language model (LLM).

That also applies to information that might not strictly be yours, but belongs to the company you work for. There have already been examples of private company data being surfaced via ChatGPT, with one of the most high-profile examples being Samsung, who clamped down on use of the chatbot this year.

In an internal memo, the company warned staff against using ChatGPT, after a security leak was traced back to an employee sharing sensitive company code on the platform.

Samsung aren’t alone either – plenty of other companies, including Apple, have banned ChatGPT for certain employees and departments.

Being responsible for exposing your company’s sensitive data could see you having a very awkward chat with HR, or even worse, fired.

2. Creative Works and Intellectual Property

Written the next great American novel and want ChatGPT to give it an edit? Stop. Never share your original creative work with chatbots, unless you’re happy to have it potentially shared with all other users.

In fact, even copyrighted works aren’t safe. The companies behind chatbots like ChatGPT are currently embroiled in a number of legal cases brought by the likes of Sarah Silverman and George R. R. Martin, who accuse them of training their large language models (LLMs) on the authors’ published writings.

Your next great idea could well be surfaced in a stranger’s ChatGPT results, so we’d suggest keeping it to yourself.

3. Financial Information

Just like you wouldn’t leave your banking or social security number on a public forum online, you shouldn’t be entering them into ChatGPT either.

It’s fine to ask the platform for finance tips, to help you budget, or even tax guidance, but never put in your sensitive financial information. Doing so could well see your private bank details out in the wild, and open to abuse.

It’s also extremely important to be wary of fake AI chatbot platforms, which may be designed to trick you into sharing such data.

4. Personal Data

Your name, your address, your telephone number, even the name of your first pet… all big no-nos when it comes to ChatGPT.

Personal details like these can be exploited by fraudsters to break into your private accounts or carry out impersonation scams – none of which is good news for you.

So, resist the temptation to put your life story into ChatGPT, and if you are determined to have it write your autobiography for you, think carefully about what you’re sharing.

5. Medical Data

It might be tempting to turn to ChatGPT for some free medical advice. Googling symptoms is a guilty pleasure of many of us, and with a chatbot, you can not only find out what that strange bulge on your neck might be, but also what action you should take.

However, before you start uploading your entire medical history to the platform, ask if you’d really want it to fall into the wrong hands, or if you want OpenAI to train its chatbot on your personal medical information.

ChatGPT also isn’t compliant with US medical privacy laws, because that’s not what it was built to handle.

Privacy issues aside, it might also give you potentially harmful, or even deadly, advice.

6. Usernames and Passwords

There’s only one place you should be writing down passwords, and that’s on the app or site that needs them. Best practice states that storing unencrypted passwords elsewhere could leave you vulnerable.

So, if you don’t want your passwords to become publicly available, we’d suggest resisting the temptation to get ChatGPT to record all your passwords in one place to make them easier to find, or to ask it to suggest stronger passwords for you.

If you’re struggling to remember your passwords (and let’s face it, we all are), then a password manager is a great tool that takes the pain out of juggling multiple passwords at once.

If you want to test your existing passwords, there are plenty of free, secure tools that can do this for you.

7. ChatGPT Chats

So okay, we’ll admit this one is a slight oxymoron, and it would be very difficult to use ChatGPT without actually talking to it, but it does a great job of demonstrating the danger of entering absolutely anything into ChatGPT.

Yes, even your own ChatGPT requests could be shared with others, and it has happened in the past.

There have even been instances recently where a bug meant that ChatGPT users were seeing chats that other users had carried out with the chatbot.

There has also been evidence that Google’s Bard chatbot has been indexing chats with users, making them easy for anyone to find online.

In both cases, the companies promised to rectify the issues, but it illustrates how quickly the tech is progressing, and that nothing, not even the requests you put into the platform, can be considered private. It’s worth keeping this in mind whenever you’re conversing with a chatbot.

How Do I Turn off ChatGPT Data Training?

We’d suggest not sharing any sensitive data with chatbots such as ChatGPT full stop, but if you want to use these tools and not have them utilize your data for training, it is possible to turn off this setting.

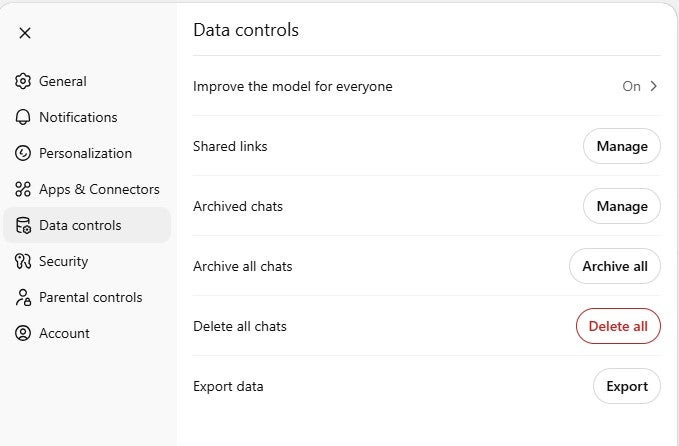

When logged into ChatGPT, navigate to the profile icon in the bottom left of the screen. Click on this to bring up the menu, then select ‘Settings’. In this menu, select ‘Data control’.

You’ll see the option ‘Improve the model for everyone’. This is set to ‘on’ by default, and it is what gives OpenAI the green light to use your ChatGPT dialogue for training. Turn it off to protect your data.

What are the Risks of Sharing Private Information with AI chatbots?

ChatGPT is a powerful tool, and it can do lots for you to make your work, and personal life, easier and more efficient.

However, it’s important to remember that the information you share with it could well be used to train the platform, and may appear in other users’ requests, in various forms. You can opt out of having your data used by ChatGPT, and we’d suggest familiarizing yourself with how the platform uses your data.

Inputting sensitive data, either personal or commercial, runs the risk of having it shared outside of your control, as some companies have already discovered for themselves.

It’s also important to realize that anything shared in the past on the platform can be extremely difficult to permanently delete, so always use chatbots with caution, and treat them like a distant acquaintance, rather than a close friend.