Report: 48% of Fleets Want Asset Tracking Amid Cargo Theft Surge

Nearly half of fleet professionals (48%) say they are looking to adopt asset tracking systems.

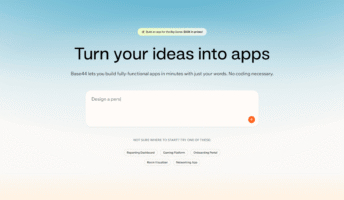

Base44 added a whole bunch of new features to its vibe coding platform after its Super Bowl ad.

Our recent data suggests that logistics professionals are more influenced by government policy than sustainability goals.

Gartner has published its cybersecurity predictions for 2026 — and companies need to tighten up their AI agent oversight.

Courses are available from big tech firms like Google and Microsoft, as well as online education platforms like Udemy.

AI coding is big right now, and LinkedIn has given employers a way to track down workers with the exact skills they need.

A report suggests that AI brings both new strengths and more sophisticated risks to cybersecurity.

Only 4% of employees said that they use AI on a daily basis at work in 2023.

There's a new AI website builder in town — Wix Harmony. Here's everything you need to know about the latest Wix innovation.

This year, the landscape of cybersecurity will never be the same. Here's what to watch for, from data surges to AI malware.

Marc Benioff compared the technology to social media in regard to its negative impact on young users.

A recent survey has found that while some CEOs are seeing gains from AI, others have yet to see any financial return.

The fear of AI job replacement is very real, with many companies openly admitting that the tech is eliminating jobs.

We've been tracking the AI errors and mistakes that have made the news over the last few years, so you don't have to.

Small startups and huge corporations alike have been consistently impacted by data breaches over the last few years.

A new report predicts AI will be front-and-center as consumers opt for more personalized hospitality experiences.

Frequent users are shouldering much of the burden of fixing the errors too, according to the data.

Slack has given Slackbot a makeover, with the newly enhanced AI program beginning a phased rollout across January and beyond.

Kick off your new year with coding: Here are the top online courses and materials to help you craft an app from scratch.

It's hard to deny that AI is a bit dimwitted at times, but businesses still need it to stay competitive in 2026.

Researchers predict that hackers will target AI agents this year. Here's how you can keep your business safe.